The advent of generative artificial intelligence and its widespread adoption in society have engendered intensive debates about its ethical implications and risks. These risks often differ from those associated with traditional discriminative machine learning. To synthesize the recent discourse and map its normative concepts, I conducted a scoping review on the ethics of generative artificial intelligence, focusing especially on large language models and text-to-image models. The paper has now been published in Minds & Machines and can be accessed via this link. It provides a taxonomy of 378 normative issues in 19 topic areas and ranks them according to their prevalence in the literature. The study offers a comprehensive overview for scholars, practitioners, and policymakers, condensing the ethical debates surrounding fairness, safety, harmful content, hallucinations, privacy, interaction risks, security, alignment, societal impacts, and others.

Machine Psychology update

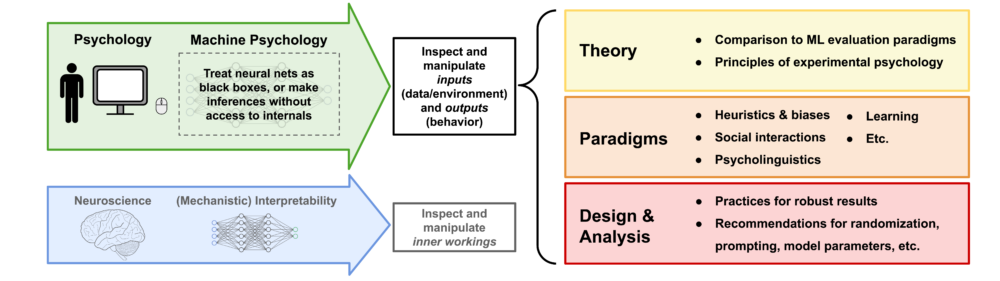

In 2023, I published a paper on machine psychology, which proposes treating LLMs as participants in psychological experiments in order to study their behavior. As the idea gained momentum, I decided to expand the project and assemble a team of authors to rewrite it as a comprehensive perspective paper. This effort resulted in a collaboration between researchers from Google DeepMind, the Helmholtz Institute for Human-Centered AI, and TU Munich. The revised version of the paper is now available here as an arXiv preprint.

Podcast on my latest research

I recently had the pleasure of engaging in an extensive conversation with Stephan Dalügge for his podcast Prioritäten. If you’re interested, you can listen to the first of two episodes here (in German):

To support the incredible work Stephan is doing, please subscribe to his podcast on Apple Podcasts or Spotify.